The legal industry stands on one core foundation: authenticity of evidence. Contracts, affidavits, financial statements, identity proofs, property records, expert reports—every decision in a courtroom or corporate legal department depends on the integrity of documents.

Yet today, that foundation is under threat.

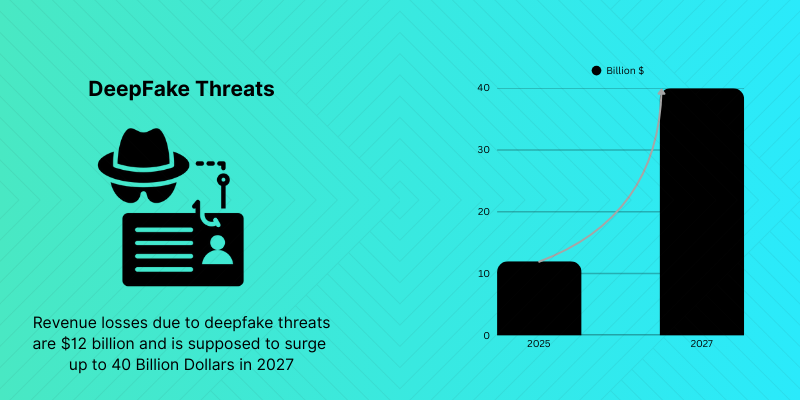

With AI-powered editing tools, synthetic document generators, and hyper-realistic manipulation techniques, forged documents are no longer crude photocopies with visible errors. They are pixel-perfect, metadata-altered, structurally consistent, and often indistinguishable from genuine documents to the human eye.

This is the digital verification gap—the widening divide between traditional document review methods and modern AI-driven fraud techniques.

And this is exactly where TRU. from Atna changes the game.

Understanding the Digital Verification Gap in Legal Workflows

Legal professionals are trained to examine:

- Signatures

- Formatting inconsistencies

- Stamp authenticity

- Notary validation

- Content discrepancies

But today’s fraud doesn’t rely on visible flaws.

Modern tampering includes:

- Metadata rewriting

- Layer-based manipulation in PDFs

- Synthetic ID creation

- AI-generated supporting documents

- Deepfake-backed documentation

- Fabricated employment or financial proofs

These manipulations are structural, not surface-level.

Manual review, even by experienced legal teams, cannot reliably detect:

- Hidden editing layers

- Font mapping anomalies

- Digital fingerprint mismatches

- Structural inconsistencies in document architecture

The result?

- Manipulated evidence entering litigation

- Fake supporting documents in corporate disputes

- Fraudulent affidavits

- Synthetic contracts in commercial claims

- Identity misrepresentation in arbitration cases

The legal system wasn’t built to combat algorithmic fraud.

It needs algorithmic defense.

Why Traditional Verification Methods Fail

Most legal verification relies on:

- Visual inspection

- Cross-referencing with submitted copies

- Manual comparison

- Basic PDF property checks

- Third-party verification calls

But fraud today is:

- Fast

- Automated

- AI-enhanced

- Structurally engineered

The human eye can’t detect structural tampering inside document layers.

And when manipulated evidence enters the legal process, the consequences are severe:

- Wrongful judgments

- Delayed litigation

- Reputational damage

- Compliance breaches

- Regulatory penalties

The legal industry requires certainty, not assumption.

Introducing TRU. from Atna – Precision for Legal Proof

TRU. from Atna is built specifically to eliminate the digital verification gap.

It doesn’t just “review” documents.

It evaluates:

- Structural integrity

- Digital composition

- Manipulation markers

- Synthetic generation patterns

- Metadata authenticity

- Layer inconsistencies

- Font signature anomalies

- Tampering footprints

Instead of asking, “Does this look real?”

TRU. asks, “Is this structurally authentic?”

That difference changes everything.

How TRU. Strengthens Legal Evidence Validation

1. Structural Document Intelligence

TRU. scans beyond visible content. It analyzes document architecture—detecting edits, replaced elements, manipulated layers, and invisible tampering.

Even if the document looks flawless, TRU. identifies hidden red flags.
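To make "document architecture" concrete for PDFs, here is a minimal sketch of one structural signal. The PDF format allows incremental updates, where changes are appended to the file instead of rewriting it, and each appended section ends with its own `%%EOF` marker. This is not TRU.'s method, which this article does not describe. The helper names `count_pdf_revisions` and `looks_incrementally_edited` are hypothetical, and the check is only a hint: linearized PDFs can legitimately contain more than one marker, and a fully rewritten file shows only one.

```python
import re

# Every saved revision of a PDF ends with an end-of-file marker.
EOF_MARKER = re.compile(rb"%%EOF")


def count_pdf_revisions(data: bytes) -> int:
    """Count %%EOF markers in raw PDF bytes (hypothetical helper)."""
    return len(EOF_MARKER.findall(data))


def looks_incrementally_edited(data: bytes) -> bool:
    """Return True when the bytes contain more than one %%EOF marker.

    Heuristic only: a positive result means the file deserves a closer
    look, not that it was tampered with.
    """
    return count_pdf_revisions(data) > 1


if __name__ == "__main__":
    # Toy byte strings standing in for real files.
    original = b"%PDF-1.7\n1 0 obj\n<<>>\nendobj\ntrailer\n<<>>\n%%EOF\n"
    edited = original + b"2 0 obj\n<<>>\nendobj\ntrailer\n<<>>\n%%EOF\n"
    print(looks_incrementally_edited(original))
    print(looks_incrementally_edited(edited))
```

A file that looks identical on screen before and after an appended edit would still differ on this check, which is the kind of gap between visual and structural review described above.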

2. Synthetic Identity & Document Detection

With the rise of AI-generated employment letters, income proofs, and supporting records, legal teams face an increased risk of synthetic evidence.

TRU. identifies synthetic patterns that manual checks miss.

3. Metadata & Digital Fingerprint Validation

Fraudsters often modify timestamps, authorship data, and document properties.

TRU. verifies whether metadata aligns with the document’s structural history.

If something doesn’t match, it flags it.
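One simple example of a metadata consistency check is comparing a PDF's creation and modification timestamps. The sketch below is an illustration under stated assumptions, not TRU.'s implementation: `parse_pdf_date` and `metadata_flags` are hypothetical names, only the full `D:YYYYMMDDHHmmSS` date form is handled, and a missing time zone is assumed to mean UTC.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Full-form PDF date string: D:YYYYMMDDHHmmSS plus an optional
# offset such as Z or +05'30'. Shorter forms are not handled here.
PDF_DATE = re.compile(
    r"^D:(\d{4})(\d{2})(\d{2})(\d{2})(\d{2})(\d{2})"
    r"(?:(Z)|([+-])(\d{2})'?(\d{2})'?)?$"
)


def parse_pdf_date(value: str) -> Optional[datetime]:
    """Parse a PDF date string, or return None if it is malformed."""
    match = PDF_DATE.match(value.strip())
    if match is None:
        return None
    groups = match.groups()
    year, month, day, hour, minute, second = (int(g) for g in groups[:6])
    _, sign, off_h, off_m = groups[6:]
    offset = timedelta(0)  # assumption: no offset means UTC
    if sign:
        offset = timedelta(hours=int(off_h), minutes=int(off_m))
        if sign == "-":
            offset = -offset
    try:
        return datetime(year, month, day, hour, minute, second,
                        tzinfo=timezone(offset))
    except ValueError:
        # Out-of-range fields, for example month 13 or a 99-hour offset.
        return None


def metadata_flags(creation: str, modified: str) -> List[str]:
    """Return human-readable flags for inconsistent date metadata."""
    flags = []
    created_at = parse_pdf_date(creation)
    modified_at = parse_pdf_date(modified)
    if created_at is None:
        flags.append("unparseable creation date")
    if modified_at is None:
        flags.append("unparseable modification date")
    if created_at and modified_at and modified_at < created_at:
        flags.append("modified before created")
    return flags
```

For instance, `metadata_flags("D:20240102120000Z", "D:20240101120000Z")` reports that the file claims to have been modified before it was created. A clean result proves little on its own, since date fields are easy to rewrite consistently, which is why a check like this would be combined with structural signals.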

4. Litigation-Ready Reports

Legal teams need more than alerts. They need defensible documentation.

TRU. generates structured verification outputs that can support legal scrutiny and compliance review.
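The format of these outputs is not specified here. As a purely illustrative sketch, a verification record can be tied to the exact file that was examined by including a SHA-256 hash of its bytes, so that any later change to the file is detectable. The function name `build_verification_record` and all field names are assumptions made for this example.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import List


def build_verification_record(data: bytes, filename: str,
                              findings: List[str]) -> str:
    """Return a JSON record binding findings to the file's SHA-256 hash.

    Field names are hypothetical and chosen for illustration only.
    """
    record = {
        "filename": filename,
        "sha256": hashlib.sha256(data).hexdigest(),
        "size_bytes": len(data),
        "checked_at": datetime.now(timezone.utc).isoformat(),
        "findings": findings,
        "flagged": bool(findings),
    }
    return json.dumps(record, indent=2, sort_keys=True)


if __name__ == "__main__":
    print(build_verification_record(
        b"%PDF-1.7\n%%EOF\n", "contract.pdf", ["modified before created"]
    ))
```

Recomputing the hash of the file later and comparing it with the stored `sha256` value shows whether the document under discussion is byte-for-byte the one that was checked.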

5. Real-Time Certainty

Time is critical in legal processes.

TRU. delivers verification insights instantly, eliminating delays in due diligence, arbitration review, and case preparation.

Where the Legal Industry Feels the Impact

The verification gap affects multiple legal domains:

Corporate Law

- M&A due diligence documentation

- Vendor contracts

- Shareholding records

- Financial disclosures

Litigation & Arbitration

- Evidence submissions

- Supporting affidavits

- Financial claims documentation

- Expert reports

Compliance & Regulatory Law

- Internal investigation documents

- Whistleblower submissions

- Policy documentation

- Regulatory filings

Employment Law

- Background verification documents

- Experience certificates

- Compensation records

- Identity proofs

Each of these areas now faces heightened exposure to document manipulation.

TRU. provides proactive fraud intelligence before risk enters the legal system.

The Future of Legal Verification is Structural

As AI tools become more accessible, document manipulation will grow more sophisticated. TRU. from Atna bridges the digital verification gap with precision, clarity, and certainty. In a world where documents can be engineered to deceive, legal professionals need technology engineered to detect.

The verification gap is real.

But with TRU. from Atna, it is no longer a vulnerability.

It becomes a competitive advantage.