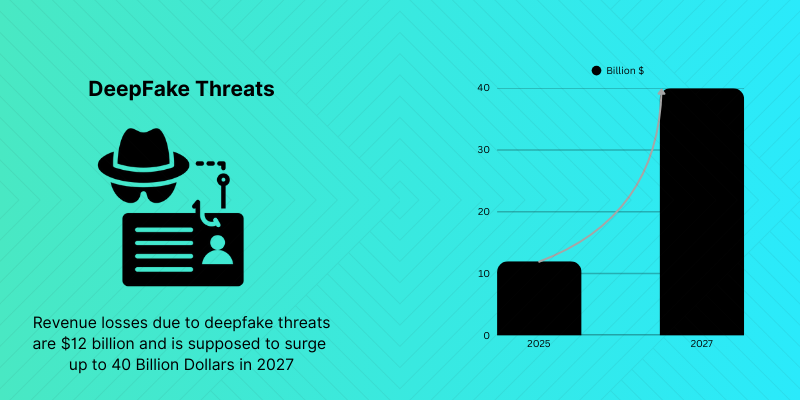

With the development of generative AI, deepfakes have surged and become especially hazardous in sectors like banking and insurance. Financial institutions are facing a significant increase in deepfake fraud attempts, which have grown by 2137% in the last three years. Deepfakes may be best known for surface-level stunts such as impersonating a celebrity, but their impact goes far deeper at the enterprise level. Mimicked faces, forged voices, fabricated documents, and entirely generated digital personas can slip past verification scanners and feed money laundering schemes. The result is not just reputational damage but also heavy financial and operational losses.

This blog explores the patterns deepfake fraud follows and how even big businesses can crumble if they do not equip themselves with the risk intelligence techniques needed for deepfake detection.

1. Synthetic Identities in Onboarding

Onboarding customers, or even employees, is a high-stakes process in the era of deepfake threats. Fraudsters can fabricate identity documents and present a fake identity to bypass KYC checks. They use deepfake tools to create entirely synthetic profiles with:

- High-resolution fake IDs

- AI-altered selfies that pass face-matching

- Pre-recorded deepfake video clips mimicking live gestures

- Cloned voice responses for audio verification

With remote onboarding on the rise, fraudsters are taking full advantage of the situation. Aided by generative scripts and AI automation, a single attacker can onboard hundreds of fake profiles across multiple institutions.
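One way to surface this kind of mass onboarding is to count how many attempts share a device fingerprint within a short time window. The following is a minimal sketch under stated assumptions, not a production control: the `OnboardingAttempt` record, its `device_id` field, and the threshold values are all hypothetical and would need tuning against real traffic.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class OnboardingAttempt:
    applicant_id: str
    device_id: str        # hypothetical device fingerprint
    created_at: datetime


def flag_high_velocity_devices(attempts, max_per_window=3,
                               window=timedelta(hours=24)):
    """Return device IDs with more than `max_per_window` attempts
    inside any sliding time window of length `window`."""
    by_device = defaultdict(list)
    for attempt in attempts:
        by_device[attempt.device_id].append(attempt.created_at)

    flagged = set()
    for device_id, times in by_device.items():
        times.sort()
        start = 0
        for end in range(len(times)):
            # Shrink the window from the left until it spans <= `window`.
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 > max_per_window:
                flagged.add(device_id)
                break
    return flagged
```

A device flagged this way is only a signal for manual review; shared devices such as bank kiosks or family computers can legitimately produce several applications.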

2. Deepfake Threats in Insurance Claims

If onboarding is the entry point for deepfake threats, insurance claims are the exit point where synthetic identities cash in.

Fraudsters now submit:

- AI-generated videos of supposed hospital stays or staged car accidents

- Fabricated police reports or discharge summaries

- Manipulated evidence of property damage using image synthesis

Once approved, such claims are paid out in real money. The fraudster then discards the identity, leaving little trace to follow, because the identity documents and burner accounts used during onboarding were false from the start.

3. Deepfake Threats in Job Applications

The menace does not end with customers. Deepfakes are also becoming a mainstream way for attackers to get into an organization as employees, using:

- Pre-recorded deepfake videos to pass HR interviews

- Cloned voices to attend onboarding or training

- AI-fabricated resumes and certifications to match job criteria

Once hired, such synthetic employees can:

- Approve fraudulent claims

- Leak sensitive customer data

- Manipulate internal systems to enable large-scale fraud

4. Deepfake Threats Through Voice Cloning

Voice biometrics is another authentication mode that banks and insurers consider safe. Pindrop’s 2025 Voice Intelligence & Security Report reveals a 1,300% surge in deepfake fraud. Among the most advanced deepfake threats is voice-cloning technology, which can reproduce a person's mannerisms, accent, and tone from as little as 30 seconds of audio.

This leads to attacks where fraudsters:

- Bypass voice-based IVR systems

- Call support centers pretending to be customers

- Request sensitive actions like password resets or fund transfers

Such deepfake threats are often so convincing that even human agents fail to recognize the fraud.

5. When Synthetic Identities Breach Compliance

Beyond the technical challenge, onboarding a deepfake identity is a regulatory nightmare. An institution that onboards someone who does not exist is exposed to:

- Fines for failure to comply with KYC norms

- AML breaches if the account is used for illicit transactions

- Audits and reputation loss due to systemic lapses

In extreme cases, foreign partners can blacklist the institution, which has cross-border consequences and limits its operations in the long run.

6. The Path Forward for Institutions

Financial institutions have to understand that deepfake threats are not a one-off event. To weather this wave, they must adopt key defense strategies, including:

- Liveness detection during video KYC to spot synthetic video playback

- Behavioral biometrics to monitor unnatural user interactions

- Multi-factor verification beyond facial and voice data

- Device and location intelligence to spot anomalies in onboarding patterns

- AI-driven anomaly detection to flag suspicious claims or actions
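As a rough illustration of the anomaly-detection idea in the last item, the sketch below flags claim amounts that sit far from the typical value, using a modified z-score based on the median absolute deviation. This is an assumption-laden toy, not a description of any vendor's system: real detectors combine many signals, and the single `amounts` feature and the 3.5 cutoff are illustrative choices.

```python
from statistics import median


def flag_anomalous_claims(amounts, threshold=3.5):
    """Return indices of claim amounts whose modified z-score
    (based on the median absolute deviation) exceeds `threshold`."""
    if not amounts:
        return []
    med = median(amounts)
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        # At least half the values equal the median; the score is undefined.
        return []
    return [
        i for i, x in enumerate(amounts)
        if abs(0.6745 * (x - med) / mad) > threshold
    ]


# Hypothetical claim amounts; the last one is far outside the usual range.
claims = [1200, 1350, 1100, 1280, 1420, 1250, 25000]
suspicious = flag_anomalous_claims(claims)
```

The median-based score is used here instead of a mean and standard deviation because a single very large fraudulent claim would otherwise inflate the baseline it is being compared against.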

Insurers and banks also need to build a culture of awareness, training teams to detect social engineering, video abnormalities, inconsistencies in documentation, and so on.

The War on Deepfake Fraud Has Already Begun

The deepfake threat can no longer be discussed as a potential risk; it is already a real one. Deepfake threats are not just reputational risks to a bank or insurance company but also regulatory, financial, and systemic ones.

Deepfake detection platforms play a crucial role in combating the rising threat of AI-generated synthetic media. By leveraging advanced algorithms and machine learning models, these platforms can identify manipulated visuals, falsified identities, and spoofed audio with high accuracy.

The Hawkings Of Deepfake Combat

As deepfake threats continue to evolve, detection platforms are becoming essential for digital trust and security. Atna leads the way with its robust deepfake detection solutions, empowering businesses to verify authenticity, safeguard operations, and stay ahead of synthetic fraud.

Atna is at the forefront of this transformation. Its AI-powered deepfake detection system offers businesses an edge by providing early warnings, actionable insights, and automated flagging mechanisms that block bad actors before any damage is done. Whether it’s a bank verifying a new account or an insurer checking a claimant’s identity, Atna ensures authenticity is never compromised.